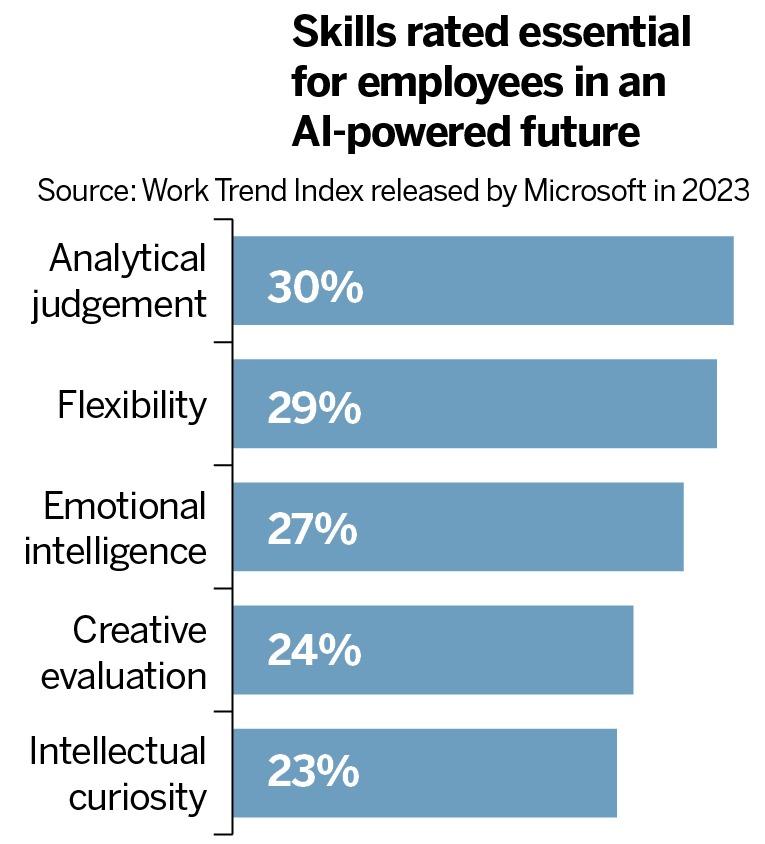

Could supersmart machines replace humans?

Rules and guardrails

As technology evolves at speed, society lags behind in developing the rules needed to manage it. The first automobile was invented in 1885, yet it took another eight years for the world's first driver's license to be issued.

ChatGPT reached 100 million users within two months of its release. Its near-human intelligence and speedy responses spooked even Big Tech leaders. That prompted the Future of Life Institute to publish an open letter, "Pause Giant AI Experiments", calling for a halt of at least six months. Since its introduction in March, the petition has garnered 31,810 signatures from scientists, academics, tech leaders and civic activists.

Elon Musk (Tesla), Sundar Pichai (Google) and the "Godfathers of AI", Geoffrey Hinton, Yoshua Bengio and Yann LeCun, joint winners of the 2018 Turing Award, have acknowledged the critical threat of possible human extinction posed by runaway AI.

Despite acute awareness of the pernicious actors lurking, legal guardrails have yet to fill the regulatory vacuum. "That's probably because we still have little inkling about what to regulate before we start to over-regulate," reasons Fitze.

The CEO of OpenAI, Sam Altman, declared before a US Senate Judiciary subcommittee that "regulatory intervention by the government will be critical to mitigate the risks of increasingly powerful models." The AI Frankenstein on the lab table terrifies its creators.
